Find Your Best Model and Put It Into Production Effortlessly

With Striveworks, start tracking model performance on real-world data instantly. We make it simple to deploy quality models, generate insights, and monitor performance—anywhere from the enterprise to the edge.

- Compare model performance and select your best candidate before you deploy.

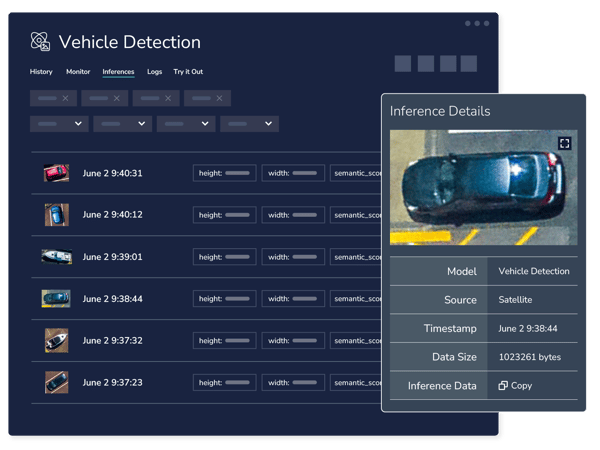

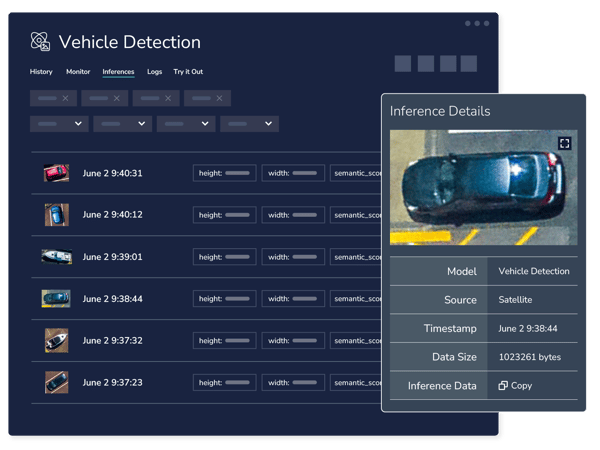

- Automatically capture all inferences and metadata from your models the moment they enter production.

- Monitor your production models continuously for unexpected patterns.

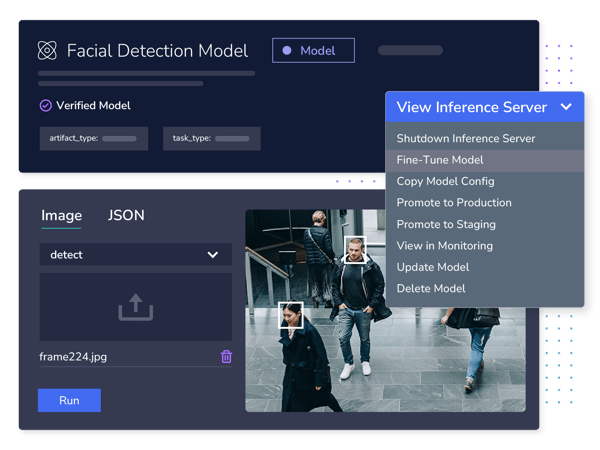

Versatile, Efficient Model Deployment

Use Striveworks to put your best models into production and connect them to the workflows that depend on them.

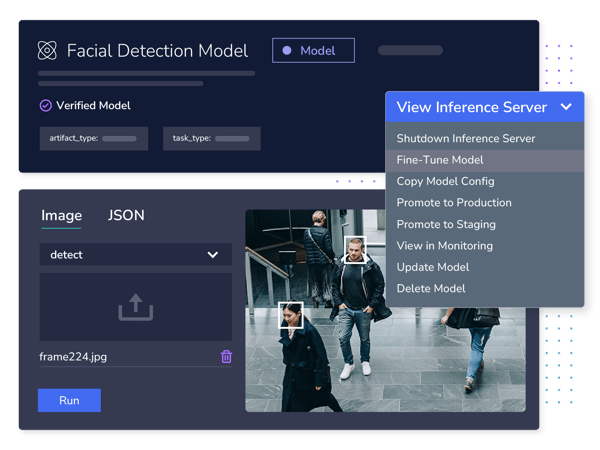

Deploy With One Click

Production-Grade Inference Servers

Integrated Inference Store

Deploy Your Best Model

- Of all the models tested for a given data source, which performs best?

- How does this model perform across disparate datasets?

- How can I use prior evaluations to select a model for a future ML pipeline?

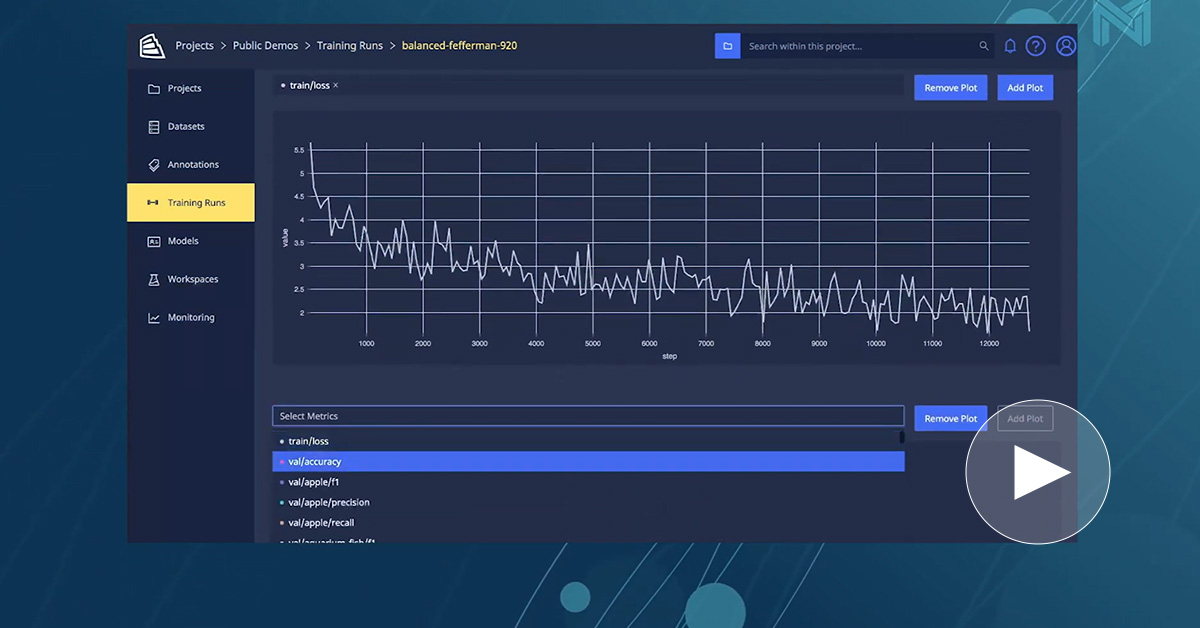

Get Fine-Grained Observability on Model Performance

- Automate drift detection to compare production performance against training performance.

- Evaluate model performance across any associated metadata.

- Curate new training datasets quickly from relevant production data.

MLOps Deployed Where You Need It

- On-prem

- Air-gapped

- Restricted networks up to IL7

- Virtual private cloud

- Hybrid cloud

- Managed cloud

- Multi-cloud

Customize Workflows With Open Integrations

Use Striveworks to drive value in any ML workflow. Through our open APIs, your team can leverage Striveworks as a seamless part of any MLOps stack.

Our abstracted, service-based architecture lets you build the workflow you want from best-of-breed technologies and open-source projects, so MLOps disappears into the background.

Build, Deploy, and Maintain ML in One Platform

Don’t drop the ball after deployment. With Striveworks, your team can collaborate to build, deploy, and maintain all of your models in an integrated, coherent platform.

The Striveworks end-to-end platform provides features for model testing and evaluation, performance monitoring, model drift detection, inference storage, and model remediation. Because these tools are connected, your team can keep models performant and reliable long after deployment.

Related Resources

Are MLOps Disappearing?

Can you build and deploy models with low-code or no-code tools? We sat down with Neural Magic to talk about what we mean by “disappearing MLOps” and how, together, we help customers accelerate their ML life cycles for faster business impact.

Make MLOps Disappear

Discover how Striveworks streamlines building, deploying, and maintaining machine learning models—even in the most challenging environments.

Your AI Models Are Live. Now What?

91% of models fail over time. Learn how to spot, retrain, and redeploy failing models effortlessly using Striveworks.